Life & Island Times: Stop Us Before We Innovate Again

Editor’s Note: Isaac Asimov’s Foundation novel was created from a series of eight short stories published in Astounding Magazine between May 1942 and January 1950.

It was heady stuff. I found them in a box of science fiction magazines at a rummage sale at the Unitarian Church on Woodward Avenue in suburban Detroit where pastor Bob Marshall, a bookstore owner, held court. They were original pulp, and did not survive more than a couple of readings before disintegrating.

According to author Isaac Asimov, the premise of the series was based on ideas set forth in Edward Gibbon’s History of the Decline and Fall of the Roman Empire, and was invented spontaneously on his way to meet with editor John W. Campbell, with whom he developed the concepts of the collapse of the Galactic Empire. Asimov wrote these early stories in his West Philadelphia apartment when he worked at the Philadelphia Navy Yard. Together with Robert A. Heinlein’s ‘future history’ novels, also influenced by Campbell, this was the beginning of my political understanding. For good or for ill.

– Vic

July 21, 2017

Stop Us Before We Innovate Again

Coastal Empire

This weekend’s online Wall Street Journal contained an article about the dangers of our aborning age of Artificial Intelligence (AI). The piece centered on the musings of the tech world’s current number one darling, SpaceX’s and Tesla’s founder, Elon Musk. It was as if Elon was blowing on a dog whistle. We mutts are supposed to start barking.

Well, here goes. Woof. Woof.

Musk is not alone in voicing AI-cautionary sentiments. “Neo-Luddites” Stephen Hawking and Bill Gates have done much the same in past years.

So what’s the deal? Technological innovation is now central to economic growth and prosperity. How does one innovate responsibly? That is essentially what he is asking. According to the article, Musk wants government policy and regulatory agencies to come to the rescue. Excuse me as I barf while recalling Smoot-Hawley, NAFTA, the Department of Education, and other great American government policy and agency triumphs.

More seriously, Musk’s approach and goal here could be seen as a subtle pushing of a camel’s nose under the tent. For example, as the marriage of AI and robotics disrupts future labor markets, leaving hundreds of millions out of work or underworked worldwide, the governments in charge must help the techies by establishing new wealth transfer programs to lessen the side effects of this brave new world. Innovation means living longer thanks to biotech, having less to do thanks to the convergence of AI and robotics, and having less of a chance to be successfully creative and meaningful thanks to AI and big data algos.

To some, it’s almost as if Elon is photoshopping a group picture of himself and his fellow privileged Silicon Valley innovators, changing them from the hunters into the hunted.

Stop us from suiciding a better living world through innovation, he seems to be saying.

One might counter Musk with this: in for a disruptive dime, in for a destroyed-world dollar. Today’s hyper-innovation means perforce that its returns to capital will exponentially dwarf its returns to labor.

To his credit, Musk put his money where his mouth is regarding his AI fears by launching, several years ago, an open AI initiative – in effect accelerating the development of AI in the hopes that the more people are involved, the more responsible it’ll be. To some this smacks of getting more free research and stuff for the smart and well-capitalized Valley guys.

Open AI is certainly a novel approach. However, it adheres to the belief that the answer to technology innovation is . . . (wait for it) . . . more and faster technology innovation.

This type of innovation explosion is different from previous ones like the industrial and information revolutions. It alters human evolution itself.

For the first time in human history, man can design and engineer the stuff around him at the level of the very atoms it’s made of. He is redesigning and reprogramming the DNA at the core of every living organism. Our creation of artificial systems that match if not surpass human intelligence exceeds our ability to foresee its consequences. But the innovators counter by saying they can connect ideas, people and devices together faster and with more complexity than ever before.

Musk and his fellow innovators would do well to start by hewing to some, if not all, of the below revised and extended versions of Isaac Asimov’s Laws of Robotics:

AI entities may not injure a human being or, through inaction, allow a human being to come to harm.

AI entities must obey the orders given them by human beings except where such orders would conflict with the First Law.

AI entities must protect their own existence as long as such protection does not conflict with the First or Second Laws.

AI entities may not harm humanity, or, by inaction, allow humanity to come to harm.

AI entities shall partner and cooperate with human beings as long as such cooperation and partnership do not conflict with the previous laws.

Robots like Roomba and AI-enabled weapon systems like those in use at DoD do not currently contain or obey these laws. Their human creators must choose to program them in, and devise a means to do so.

The development of AI is a business, and businesses are notoriously uninterested in fundamental safeguards, especially philosophic ones. History is instructive here. Neither the tobacco industry, the automotive industry, nor the nuclear power industry employed any meaningful safeguards initially. They resisted externally imposed safeguards. None of them has yet accepted an absolute edict against ever causing harm to humans. Safeguards are expensive, increase product complexity, and do not contribute to profitability or to getting a company to its IPO. Bottom line: the AI companies won’t develop AI-law-compliant products on their own.

So, let’s assume the government tries to control this situation via policy and regulation. AI technology leaders might just move offshore and forsake the US market for the time being. More importantly, the world’s current economy is a constantly evolving ecosystem in which participants seek to change and adapt to their environment, which itself is evolving due to new technology and innovation, as well as shifts in investor and consumer behaviors. How can any government hope to write rules for such a competitive maw, let alone police it? Bottom line: government control won’t work very well.

Furthermore, even if we humans were to perfectly control the integration of AI Laws into humanity’s convergence with its AI machine partners, the worst long-term harm might come from obeying these AI laws perfectly, thereby depriving humanity of inventive or risk-taking behavior.

I guess we gotta leave some room to let the dogs out now and then.

Woof. Woof.

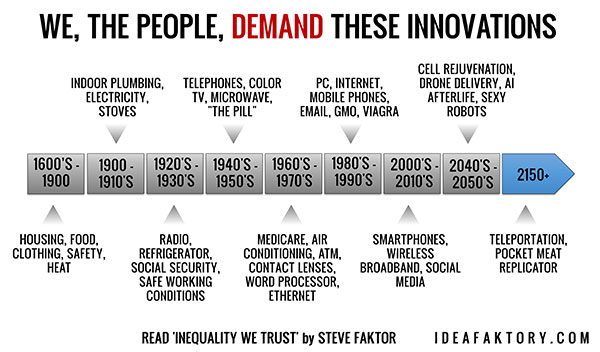

Meanwhile, chew on this graphic, my fellow meat-loving dogs.

Copyright © 2017 From My Isle Seat

www.vicsocotra.com